Containers have been a hot topic in the server space, but less so in the end-user technology space where Juriba spends its time. This is beginning to change, with some desktop infrastructure services now supporting containers to scale in the cloud. When most people think of containerization, they think of Kubernetes or Docker. But did you ever think about Microsoft or Windows and containerization?

As this technology is increasingly important in the Microsoft universe, we thought it might be useful to take a few minutes to go through the basics of containers and explain how containerization differs from virtualization before diving into what Microsoft has been working on.

What Is Containerization?

Containerization isn't a new concept. In fact, the concept of containers was first used in the 1970s. In 2008, LXC (LinuX Containers) became the first and most complete implementation of a Linux container manager, and the closest thing to what we call containerization today. It used cgroups and Linux namespaces to run containers on a single Linux kernel without requiring patches.

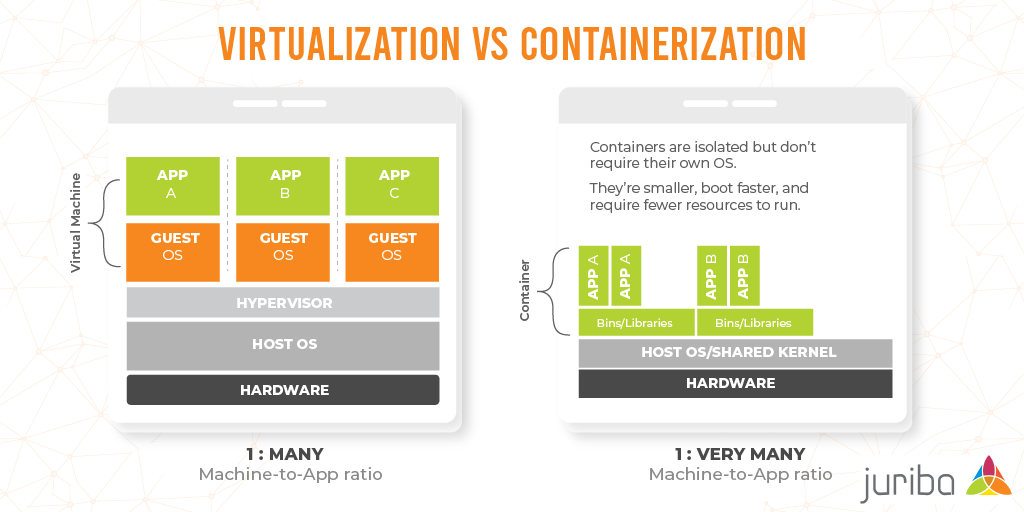

To understand what a container is, it is easiest to compare and contrast it with virtualization.

Virtualization, whether on-premises or in the cloud, relies on a hypervisor to virtualize the physical hardware. You can think of a virtual machine as a self-contained computer that has been packed into a single file, and the hypervisor is what allows it to run. The hypervisor can either run directly on the underlying infrastructure (e.g., Hyper-V) or as an app in the host OS (e.g., VMware Workstation).

To run an application in a virtual machine, you also need a guest operating system and a virtual copy of the hardware. The problem is that the guest OS for each application consumes a lot of CPU and memory resources (e.g., a minimum of 400 MB each adds up to 1.2 GB for three apps). In addition, each application needs its own set of libraries and dependencies (e.g., a DB connection library, Python or Node.js packages).
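The memory arithmetic above can be sketched in a few lines of Python. This is only an illustration: the 400 MB figure is the example minimum quoted in the text, not a measurement, and the function names are made up for this sketch.

```python
# Illustrative only: guest-OS memory overhead when every app gets its own VM.
# GUEST_OS_MB is the "min. 400 MB" example figure from the text (an assumption,
# not a measured value).
GUEST_OS_MB = 400


def vm_guest_os_overhead_mb(app_count: int, guest_os_mb: int = GUEST_OS_MB) -> int:
    """Each VM carries its own guest OS, so the overhead grows linearly."""
    return app_count * guest_os_mb


def mb_to_gb(megabytes: int) -> float:
    """Convert using decimal units (1 GB = 1000 MB), as the text does."""
    return megabytes / 1000


if __name__ == "__main__":
    for apps in (1, 3, 10):
        overhead = vm_guest_os_overhead_mb(apps)
        print(f"{apps} app(s): {overhead} MB ({mb_to_gb(overhead)} GB) of guest OS overhead")
```

The point of the sketch is the linear growth: every additional application pays the full guest OS cost again before its own libraries and dependencies are even counted.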

This means that a lot of resources are wasted, it is harder to scale, and things can break when deployed to a VM (problems also known as "dependency hell" and "it worked on my laptop"), which hinders continuous, agile DevOps delivery. It is also the main reason why many corporates are not seeing the return on investment that they expected from virtualizing some of their PC estates.

Containers, on the other hand, run on a container runtime engine (e.g., Docker Engine) that sits on top of the host OS and the underlying infrastructure. On top of that sit the binaries and libraries, but rather than requiring a guest OS, they are built into special packages. For Docker, for example, those are Docker images. Finally, the application resides in its own Docker image, allowing it to be managed independently by the runtime engine.

Essentially, this means that there are far fewer moving parts, making containers faster, more lightweight, and less resource-intensive than VMs. The smaller size also results in faster spin-ups, and containers better support cloud-native apps that scale horizontally. Because containers are self-contained, they can run wherever a compatible container runtime is available, making them an ideal candidate for modern agile development and application patterns, like DevOps, microservices, or serverless applications.

To sum it up, virtual machines have their advantages when isolating entire systems, but containers are a better choice for isolating applications because they support a much higher app-to-machine ratio. To take advantage of containers, applications have to be designed and packaged differently (including all the relevant libraries, software dependencies, environment variables, and configuration files). This process is called containerization.

How Microsoft Uses Containers

While we often think of Kubernetes and Docker when thinking about containerization, some people are surprised to learn not only that Microsoft uses five different container models and has two different container use cases, but also that they are most likely already using containerization themselves. For example, you are using this technology when you wrap and isolate your UWP apps or when you use thin virtual machines to deliver security.

(Image Credit: Microsoft 2019)

Microsoft defines containers as:

"[...] a technology for packaging and running Windows and Linux applications across diverse environments on-premises and in the cloud. Containers provide a lightweight, isolated environment that makes apps easier to develop, deploy, and manage. Containers start and stop quickly, making them ideal for apps that need to rapidly adapt to changing demand. The lightweight nature of containers also makes them a useful tool for increasing the density and utilization of your infrastructure."

The Microsoft container ecosystem contains a number of ways you can develop and deploy applications using containerization:

- You can run Windows-based or Linux-based containers on Windows 10 using Docker Desktop or you can run containers natively on Windows Server.

- Using the container support in Visual Studio and VS Code, you can develop, test, publish, and deploy Windows-based containers. This includes support for Docker, Docker Compose, Kubernetes, Helm, and other useful technologies.

- If you want to allow others to use your applications, you can publish them as container images to the public Docker Hub. If your application is only for internal development and deployment, you can publish it to a private Azure Container Registry.

- You can deploy your containers at scale on Azure or other clouds by pulling your container images from a container registry (e.g., Azure Container Registry) and deploying/managing them via an orchestrator like Azure Kubernetes Service or Azure Service Fabric. Or you can deploy containers to Azure virtual machines as a customized Windows Server image (Windows app) or a customized Ubuntu Linux image (Linux app) and manage them at scale using Azure Kubernetes Service.

- Lastly, if you are looking to deploy containers on-premises, you can do so by using Azure Stack with the AKS Engine or Azure Stack with OpenShift. In addition, you could set up Kubernetes yourself on Windows Server. In the future, Microsoft is aiming to support Windows containers on Red Hat OpenShift Container Platform as well.
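To make the publish step in the list above more concrete, here is a minimal Python sketch that assembles the standard Docker CLI commands (`docker build`, `docker tag`, `docker push`) for pushing an image to a registry. The image name `inventory-app` and the registry `contoso.azurecr.io` are hypothetical placeholders, and the sketch only prints the commands rather than running them. It also assumes you have already authenticated against the registry.

```python
import shlex
from typing import List


def publish_commands(image: str, tag: str, registry: str) -> List[List[str]]:
    """Return the Docker CLI commands to build, tag, and push an image.

    Assumes a Dockerfile in the current directory and that you have
    already logged in to the registry.
    """
    local_ref = f"{image}:{tag}"
    remote_ref = f"{registry}/{image}:{tag}"
    return [
        ["docker", "build", "-t", local_ref, "."],  # build from ./Dockerfile
        ["docker", "tag", local_ref, remote_ref],   # add the registry-qualified name
        ["docker", "push", remote_ref],             # upload to the registry
    ]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for command in publish_commands("inventory-app", "1.0", "contoso.azurecr.io"):
        print(shlex.join(command))
```

An orchestrator such as Azure Kubernetes Service would then pull the image by its registry-qualified name when deploying it.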

In addition to Kubernetes, you have two different isolation options on Windows Server: process isolation or Hyper-V isolation. With process isolation, all containers share the same kernel as the host, so multiple containers can run on one host OS. Use this approach if you know which processes you will need to run on the server and if you can ensure that no information can leak between the different containers, as there is a small security risk when sharing the same kernel.

Alternatively, you can go the isolated container route on Windows Server using Microsoft's Hyper-V. Technically, each container then runs in a virtual machine with its own kernel, as Microsoft runs a thin OS layer on top of Hyper-V to host the Docker container image. This approach adds a layer of hardware isolation between containers, making it more secure.
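On a Windows host, Docker exposes this choice through the `--isolation` flag of `docker run`, which accepts `process` or `hyperv`. The following Python sketch only builds the command line so that the difference between the two modes is visible. The helper name is made up for this example, and the Server Core image is just one possible base image.

```python
from typing import List, Sequence

# Isolation modes discussed above, as accepted by `docker run --isolation=...`
# on a Windows host.
VALID_ISOLATION = ("process", "hyperv")


def build_run_command(image: str, isolation: str, command: Sequence[str] = ()) -> List[str]:
    """Build a `docker run` command line with an explicit isolation mode."""
    if isolation not in VALID_ISOLATION:
        raise ValueError(f"isolation must be one of {VALID_ISOLATION}, got {isolation!r}")
    return ["docker", "run", "--rm", f"--isolation={isolation}", image, *command]


if __name__ == "__main__":
    # Example image only; substitute the base image that matches your host.
    image = "mcr.microsoft.com/windows/servercore:ltsc2019"
    for mode in VALID_ISOLATION:
        print(" ".join(build_run_command(image, mode, ["cmd", "/c", "ver"])))
```

The image and the command stay the same in both cases. Only the isolation boundary changes: a shared kernel with `process`, or a dedicated kernel inside a lightweight VM with `hyperv`.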

Conclusion

While containerization could be seen as the next evolutionary step in virtualization, each technology has its place. However, containers allow you to develop and deploy applications faster and in a more scalable and more agile way. In the desktop space, we are wondering whether containers will eventually replace the dedicated and non-persistent VDI machines that we run today for our end users. That decision will become easier as organizations rationalize and reduce their application estates to a point where this becomes a workable solution for some users. For now, containerization might be a good option for high-volume activities such as application smoke testing against a patch or a Windows 10 servicing release.

We will keep a close eye on how Microsoft further develops their containerization strategy with the upcoming announcements around MSIX and Windows 10X, but in the meantime, it is a great idea to familiarize yourself with the concept, use cases, and benefits that containerization can bring to the table.

Barry is a co-founder of Juriba, where he works as CEO to drive the company strategy. He is an experienced End User Services executive who has helped manage thousands of users, computers, applications and mailboxes to their next IT platform. He has saved millions of dollars for internal departments and customers alike through product, project, process and service delivery efficiency.
